As with children, ethics needs to be built into robots before, not after, they develop other skills, says Daniel Glaser

Every week brings a new warning that robots are taking over our jobs. If they do take over our work and our planet, how will robots learn ethics? That question has begun to trouble many people.

As early as the 1940s, Isaac Asimov came up with the ‘Three Laws of Robotics’, outlining moral rules robots should abide by. More recently, the British Standards Institution has issued official guidance advising designers how to create ethical robots, which is meant to stop them taking over the world.

From a neuroscientist’s perspective, robot designers should learn more from human development. We teach children morality before algebra. Once they are able to behave well in social situations, we teach them language skills and more complex reasoning. It needs to happen this way round. Even the most sophisticated bomb-sniffing dog is taught to sit first.

If we really want robots to think more like we do, we can’t retrofit morality and ethics. We need to focus on ethics first, build it into their core, and only then teach them to drive.

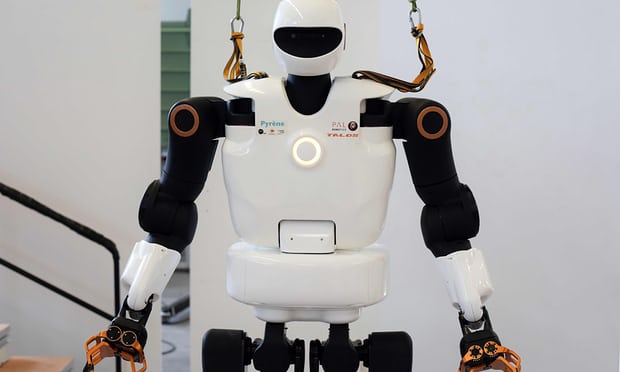

Programming challenge: morality should be built into the core of a robot. Photograph: Remy Gabalda/AFP/Getty Images

This article was first published on the Guardian website as part of the ‘A neuroscientist explains’ series.